Midjourney V7 is finally here!

Plus mind-blowing new camera controls from Higgsfield & Luma Ray2 video

Reading time: about 4 minutes.

Welcome to Visually AI!

🔮AI News this Week

Midjourney V7

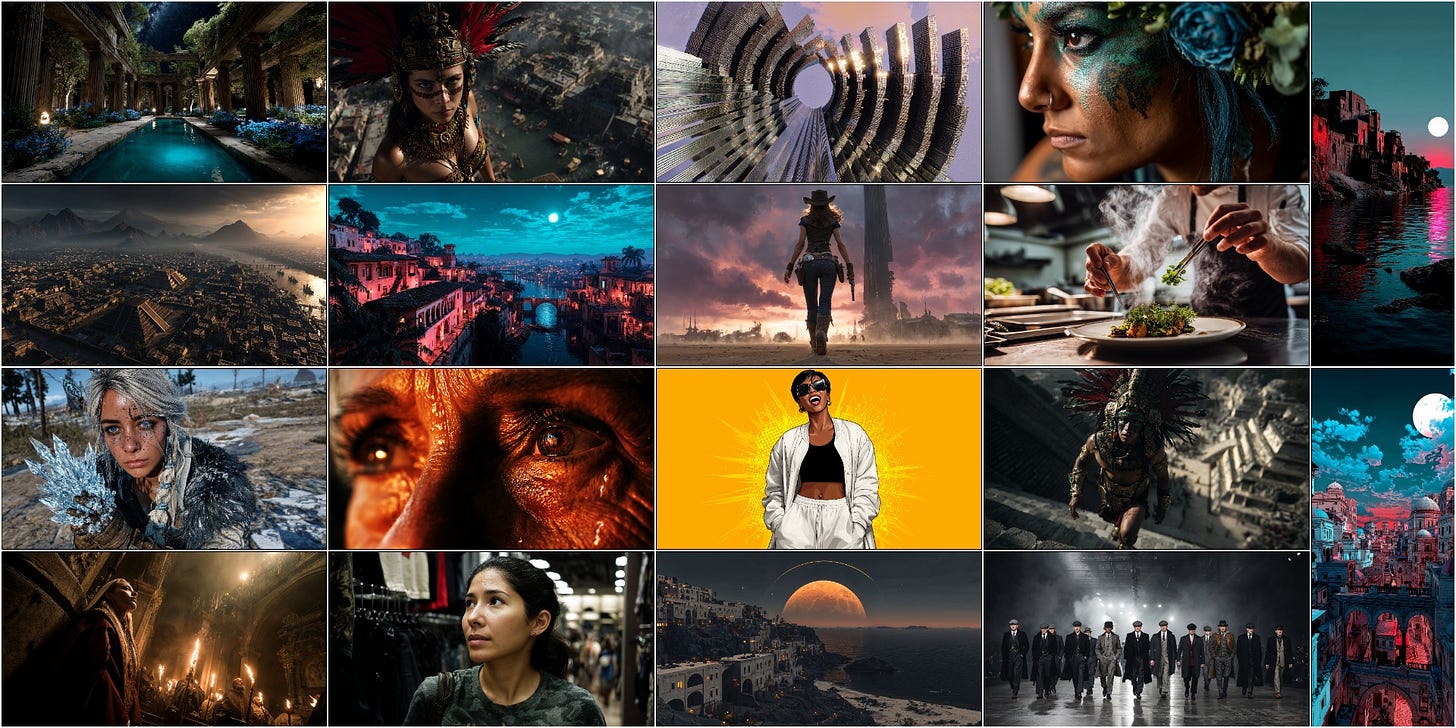

Midjourney finally released the alpha version of V7, with improved image quality (including hands), Draft Mode for quick iterations, and support for voice editing commands.

V7 has model personalization (the --p parameter) turned on by default, so you need to create a new personalization profile before you can use V7. To build it, you rank pairs of images, which takes about 5 minutes.

You can toggle personalization on and off, and you can create multiple profiles and use them together:

Not all features are available in V7 yet, but more should be added over the next several weeks. Right now, srefs, moodboards and personalization are available and will keep improving in future updates. Text rendering is worse than in V6 but should also improve.

Not available yet: Upscale & Editor fall back to V6 models.

These are some of my first results:

📸 AI Snapshots

Higgsfield Motion Controls

Higgsfield AI released Higgsfield DoP I2V-01-Preview with an incredible menu of camera motion controls, including Bullet Time, Whip Pan, Through Object Out, and many more.

This is not the same as Higgsfield’s original video creation platform, which allowed you to create multiple scenes for longer videos.

The results are mind-blowing! This is an example I generated using “Crane over the head” and “Bullet time”:

Luma Ray2 Camera Motion Concepts

Luma also released Ray2 Camera Motion Concepts with a variety of dramatic shots, like Roll, Truck Left or Right, and Bolt Cam. Unlike Higgsfield AI's controls, these motion options can be used in combination.

I used one type of motion per shot and extended the video once: Bolt Cam and Orbit Left.

Pikaframes

Pika updated Pikaframes so you can upload up to 5 reference images and transition between them. It's a creative way to move seamlessly between similar images and sequences, but you can use different styles and subjects too.

I included a tutorial demo in the video below:

Krea Video Restyle

Krea's new Video Restyle lets you upload reference videos and restyle them with hundreds of preset styles or a style of your own, using four motion capture controls:

→ Depth: Keeps the motion from the original's depth map

→ Edges: Follows motion from edges, good for talking faces or close-ups

→ Pose: Tracks human motion, great for static backgrounds

→ Video: Uses the original's pixel motion with no extra processing

Here is my first try and a tutorial to show you how to do it too:

Udio v1.5 Allegro

Udio released v1.5 Allegro, a new model streamlined to generate music 4x faster without losing audio quality. Udio also added Styles, a new feature for creating new songs.

🚀 My Recent Top AI Tool Picks

ElevenLabs MCP: A Model Context Protocol (MCP) server that lets MCP clients like Claude Desktop, Cursor, Windsurf, and OpenAI agents interact with ElevenLabs' powerful Text to Speech and audio processing APIs

ByteDance Uno: Combines multiple subject reference images into one image while maintaining consistency

HiDream-I1 Image: New open source image model with great text rendering. Available in 3 versions: Full, Dev, and Fast. Demos are available on Hugging Face.

HiDream-I1 Prompt: Candid photography of a southeast asian woman, approximately 30 years old, with realistic skin texture and micro-expressions, standing next to a sign that says "HiDream is here!" on a busy New York City street, daytime

Freepik Video Editor: New video editor in the AI Suite with features including trimming, adding text, FX, blur, audio, and more.

Napkin: A new Napkin update lets you export visuals directly to Google Slides, PowerPoint, Canva, Keynote and more, and import your own files into Napkin.

InfiniteYou-FLUX: Create Infinite Photographs of You with Diffusion Transformers (DiTs) like FLUX

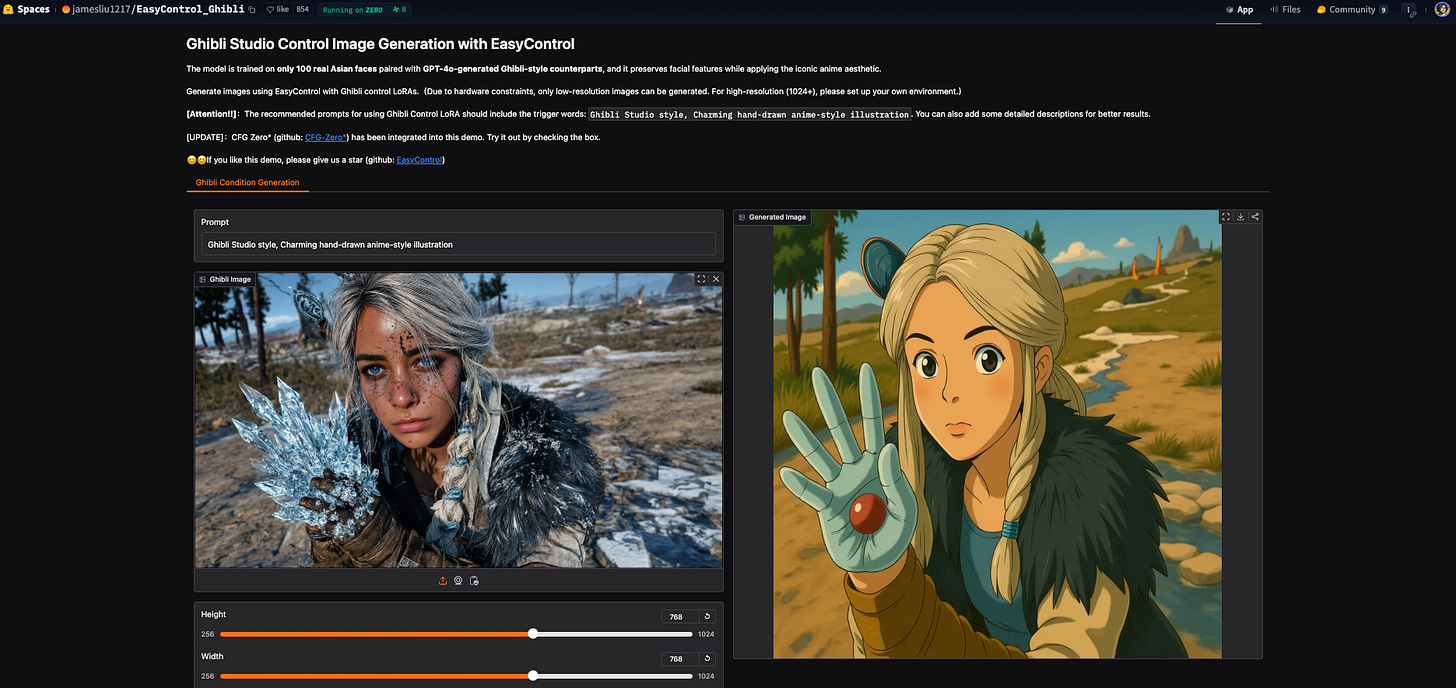

Ghibli with EasyControl: A Hugging Face Space that generates images from your uploaded reference image using EasyControl with Ghibli control LoRAs.

📺 Visually AI on YouTube

Krea AI Video Restyle | Quick Demo & Tutorial

🖼️ Image Prompts

Prompt: Medium tracking shot of a Formula 1 car taking a tight corner, tires smoking against asphalt. Driver's determined eyes visible through the helmet visor. Hyperrealistic carbon fiber textures, motion blur on wheels, heat distortion from engine, dramatic dynamic composition, race day lighting

Prompt: First person POV from inside a racing sailboat cutting through massive ocean swells during a storm. Water sprays across the deck as crew members fight to maintain control. Ultra-realistic water physics, authentic nautical details, dramatic weather lighting, immersive camera movement

🎥 Video Prompt

This is an image-to-video prompt for Google DeepMind's Veo 2 on Freepik, with sound effects from Freepik's Add Sound:

Prompt: A woman strolls along a waterfront promenade at sunset, wearing a flowing striped dress and holding a straw hat. The camera gently tracks alongside her, capturing the serene water and picturesque buildings in the background, with warm golden light enhancing the scene.

Thank you for reading.

Have a creative week!