Natural Language Video Editing Transforms Creative Workflows

& GPT-5 & AI Music updates.

Total reading time about 5 minutes.

Hello {{ subscriber.first_name }}!

Welcome to Visually AI!

Runway Aleph: Video editing with conversational language

You can change an existing video's environment, angle, background, lighting, etc. with simple text prompts.

For the video below, I used this prompt: "Change the camera angle to be an aerial drone shot looking down as this woman walks down the narrow street from above"

For a second test, I uploaded a video and described what I wanted to see: "Change the sea to a busy Athens street with cars and buildings instead of water"

The subject changed a little and the street isn't busy, but that's not bad for a one-sentence prompt and a few minutes of waiting:

Google Genie 3 Redefines the Boundaries of AI-Generated Worlds

Google DeepMind's Genie 3 changes the game by creating interactive 3D worlds you can actually navigate and modify in real time, rather than just passive video sequences.

This isn't just another video generator - it's a legitimate world simulator that maintains visual consistency for a full minute at 720p/24fps while responding to user commands and remembering where you've been.

The system already works with DeepMind's SIMA AI agent for embodied AI research, essentially creating unlimited training environments on demand.

Key capabilities:

- Generate persistent, explorable 3D environments from text prompts
- Modify worlds dynamically with natural language ("make it rain," "add a dragon")
- Maintain environmental consistency across extended sessions
- Work with AI agents for AGI research and training
- Model realistic physics, lighting, and ecosystems

Current limitations: 1-minute memory cap, 720p resolution ceiling

Check out this example of an interactive world environment with Genie 3, shared on Google DeepMind’s announcement page:

Prompt: Walking on a pavement in Florida next to a two-lane road from one side and the sea on the other, during an approaching hurricane, with strong wind and waves splashing over the road. There is a railing on the left of the agent, separating them from the sea. The road goes along the coast, with a short bridge visible in front of the agent. Waves are splashing over the railing and onto the road one after another. Palm trees are bending in the wind. There is heavy rain, and the agent is wearing a rain coat. Real world, first-person.

Ideogram - Character

Ideogram's Character feature looks to solve one of AI art's biggest challenges: maintaining the same character across multiple images using just a single reference photo.

Ideogram analyzes facial features, body proportions, clothing style, and distinctive characteristics from one uploaded image, then generates unlimited variations while preserving the character's core identity. The system works exceptionally well with realistic styles and can adapt characters to different poses, expressions, and environments.

Key capabilities:

- Single-image character reference system
- Unlimited character variations and poses
- Strong preservation of facial features and identity
- Excellent performance with realistic and semi-realistic styles
- Free access for all users on ideogram.ai
- Compatible with existing prompt engineering techniques

Take a look at my first test:

Moonvalley Marey

Moonvalley presents Marey as the first commercially safe AI video model, trained exclusively on licensed, high-resolution footage without any scraped or user-submitted content.

Built specifically for Hollywood professionals and commercial studios, Marey delivers cinematic-quality video generation that can withstand legal scrutiny in professional production environments. The system operates natively at professional resolutions and frame rates, with sophisticated understanding of cinematography principles, lighting, and motion dynamics.

Marey's ethical training approach addresses the industry's biggest concern about AI video tools: copyright liability. By using only licensed datasets, the platform provides legal protection for commercial use while maintaining the creative flexibility that filmmakers demand.

Key capabilities:

- 100% licensed training data for commercial safety
- Professional-grade cinematography and lighting
- Native high-resolution output up to 4K
- Advanced motion control and camera movement
- Integration with existing film production workflows
- Legal protection for commercial applications

Grok Imagine & Video

Grok Imagine also launched recently, with a lot of noise being made on 𝕏.

The platform's image-to-video pipeline starts with either generated or uploaded images, then transforms them into dynamic 15-second clips with native audio generation. Unlike competitors with strict content policies, Grok Imagine lets creators work with far fewer restrictions, and its “spicy mode” has caused some concern.

It was also recently announced that Grok Imagine is coming to the web; until now it has only been available on mobile.

I will keep an eye on how Grok develops. For now, quality is closer to what other video models offered at their original launch, with speed prioritized over generation quality.

GPT-5

OpenAI's GPT-5 recently launched in ChatGPT, and the general consensus seems to be that it was a little underwhelming. I would encourage you to use the thinking mode! I have had great results with it.

GPT-5 integrates text, image, audio, and video understanding within a single unified model.

With the model's ability to reason spatially and visually while maintaining context across multiple modalities, GPT-5 can analyze complex visual compositions, suggest design improvements, and generate detailed creative briefs that bridge the gap between conceptual ideas and executable visual projects.

For creative professionals, the standout feature is enhanced spatial awareness in image generation and analysis. The model demonstrates sophisticated understanding of interior design principles, architectural relationships, and visual hierarchy, making it valuable for everything from mood board creation to technical design feedback.

Some users have also expressed frustration that, unless you are on the highest-tier paid plan, you cannot return to older models that they actually prefer. (I believe this is now fixed, with the option to return to 4o becoming available.)

Suno - V4.5+

Suno's V4.5+ update focused on improved vocal quality, expanded genre understanding, and more sophisticated mashup capabilities.

The improved vocal engine delivers performances with better breath control, pitch accuracy, and natural phrasing. The system can now understand over 100 distinct musical genres and can blend styles by identifying shared musical DNA rather than simply layering elements.

V4.5+ introduces three professional audio production tools: advanced mixing controls, dynamic range optimization, and real-time collaboration features. The model can now generate longer compositions with better structural coherence, making it suitable for commercial music production and professional creative workflows.

Here’s an example instrumental I created:

ElevenLabs

And just as Suno was making moves, ElevenLabs decided to expand beyond voice synthesis with Eleven Music, introducing the first AI music platform that treats songs as editable compositions rather than static outputs.

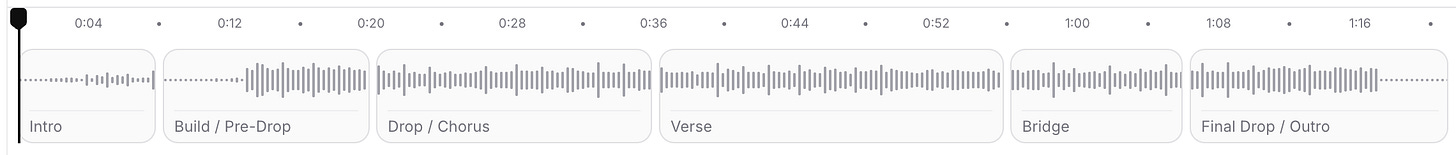

The breakthrough feature is section-level generation and editing directly from the waveform interface. Unlike traditional AI music tools that generate complete tracks, Eleven Music lets you reorder, rewrite, and remake individual sections (verses, choruses, bridges) without regenerating the entire song.

The platform also addresses the industry's biggest legal concern with comprehensive licensing agreements through Merlin and Kobalt, covering thousands of independent artists and songwriters.

Key capabilities:

- Section-by-section generation and editing from waveform interface
- Multilingual lyric support with natural language style control
- Real-time streaming during generation for immediate feedback
- Commercial licensing cleared through major industry partnerships
- Seamless transitions and precise mood shifts between sections
- End-to-end workflow from concept to finished, rights-cleared track

Note: ElevenLabs is still expanding genre coverage, and commercial use comes with attribution requirements.

Example song:

🖼️ Image Prompts

Prompt: Candid full-body photo of an Afro-Latina in her late 30's with wavy brown hair, wearing professional teal blue scrubs, wearing white tennis shoes in a hospital hallway background.

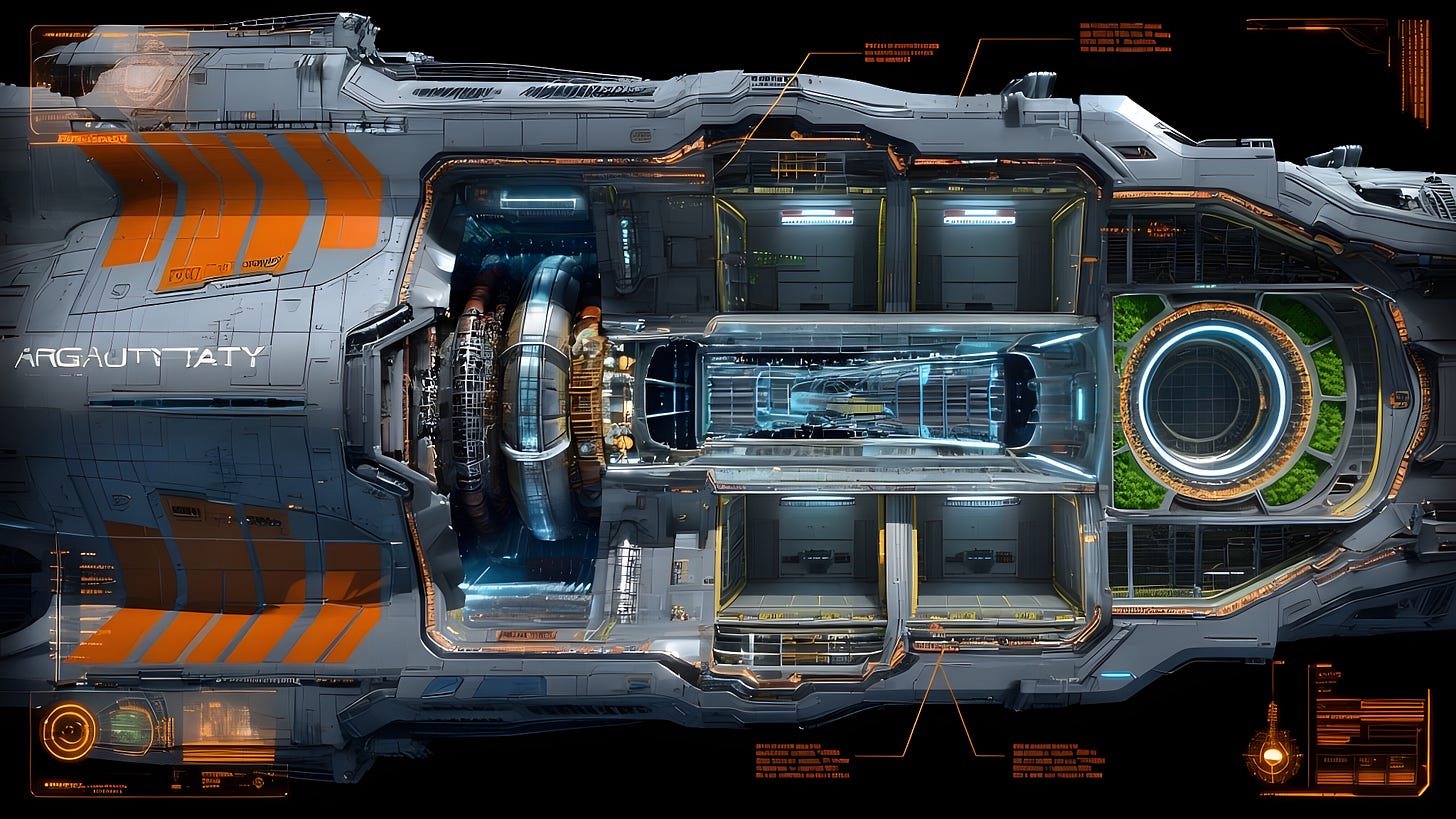

Prompt: Interstellar Battlecruiser "Argonaut" cinematic star-ship cutaway, showing antimatter reactor, warp coils, hangar bays, hydroponic ring; stylised sci-fi technical plate, cool steel palette with orange hazard stripes, holographic HUD readouts annotated. Photoreal, highly detailed, realistic textures, 8k resolution

📽️ Video Prompts

Prompt: A fixed medium shot lingers on a Tesla charging port in a silent, concrete garage. Arcs of electricity burst from the port, weaving intricate patterns that form a hovering wireframe Tesla vehicle, angled toward a clear forward trajectory. As the arcs pulse brighter, solid panels form around the frame in perfect alignment with its direction. The now-photorealistic Tesla settles onto the ground. The garage environment pixelates and reshapes into a glowing cyberpunk cityscape, as the vehicle begins to glide forward along a holographic lane. The camera transitions into a smooth tracking shot, following the Tesla. No voiceover, no text

- Veo 3

Prompt: cinematic, effortlessly cool woman wearing oversized gradient-tint 70s sunglasses, feathered hair, warm sunlight with soft haze, muted earth tones and golden yellows, vintage grain texture, flared denim jacket, subtle lens flare, authentic film look, background of a classic convertible parked by a palm-lined street, relaxed and timeless vibe

- Midjourney Video

Thank you for reading.

Hope you have a creative week!
